A new probabilistic method for ranking, allocating bids, and predicting outcomes.

September 28, 2016 by Guest Author in Analysis

This article was written by guest author Thomas Murray. Ultiworld’s coverage of the 2016 Club Championships is presented by Spin Ultimate; all opinions are those of the authors.

The purpose of this article is to outline an alternative method for ranking ultimate teams, allocating National Championship bids to the regions, and predicting single-game and tournament outcomes. At the end of this article, I report predictive probabilities derived from this method for each team’s pool placement and tournament finish at the National Championships this week. I will keep technical details in this article to a minimum.[1]

Like the current algorithm, the proposed method does the following:

- accounts for strength of schedule

- rewards teams for larger margins of victory

- decays game results over time, i.e., down-weights older results

Unlike the current algorithm, the proposed method never needs to ignore games between teams with hugely differing strengths. Recall that the current algorithm ignores blow-out games between teams with rating differentials of 600 or more, but only if the winning team has at least five other results that are not being ignored. Moreover, the proposed method facilitates the following:

- down-weighting shortened games, e.g., 10-7

- probabilistic bid allocation

- prediction

The proposed method is a hybrid model that I’ve developed specifically for ultimate, which alleviates the known flaws of the win/loss and point-scoring models that Wally Kwong considered last year here on Ultiworld.[2]

I think the current algorithm and bid allocation scheme are pretty darn good, and overall these tools/procedures have been beneficial for fostering a more competitive and exciting sport. Frankly, we are splitting hairs at this point with respect to ranking, and even bid allocation. The current algorithm doesn’t facilitate prediction, however. I am doing this because I enjoy this sort of thing, and minor improvements for ranking and bid allocation can still be worthwhile. The predictions are interesting in their own right.

I plan to maintain current rankings and Nationals predictions for all Club and College divisions on my website.

Thank you to Nate Paymer for making the USA Ultimate results data publicly available at www.ultimaterankings.net. This was an invaluable resource.

The Method

The key idea is that a win is split between the competing teams based on the score of the game. This is what I call the “win fraction.” In a win/loss model, the winning team gets a full win, or a win fraction of 1.0, and the losing team gets a total loss, or a win fraction of 0.0.

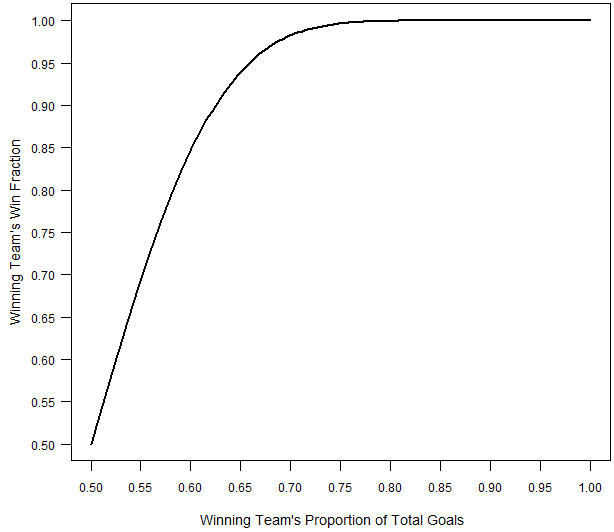

In the proposed method, the winning team gets a win fraction that depends on the proportion of points that they scored, and the losing team gets the remainder. The win fraction for the winning team is depicted in the figure below as a function of their proportion of the total points scored in the match.

Note that there is a diminishing marginal return for larger margins of victory, just like the current algorithm, and in line with our subjective notions.[3]
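Footnote 3 defines the win fraction as the chance of winning a game played hard to 13 when a team scores each point independently with probability p. A minimal Python sketch of that definition follows; the function names are hypothetical, and treating points as independent with no win-by-two rule is the working assumption stated in the footnote, not a claim about real games.

```python
from math import comb


def win_fraction(p: float, target: int = 13) -> float:
    """P(reaching `target` first) when every point is won independently with prob. p.

    The winner scores the final point; before it, the winner has target - 1 points
    and the loser has k points (0 <= k < target), arranged in any order.
    """
    q = 1.0 - p
    return sum(comb(target - 1 + k, k) * p**target * q**k for k in range(target))


def observed_win_fraction(points_for: int, points_against: int, target: int = 13) -> float:
    """Plug a team's observed share of the points into win_fraction."""
    p = points_for / (points_for + points_against)
    return win_fraction(p, target)


# Winning team's win fraction for progressively larger margins in games to 13.
for winner, loser in [(13, 11), (13, 9), (13, 7), (13, 5), (13, 3)]:
    print(f"{winner}-{loser}: {observed_win_fraction(winner, loser):.3f}")
```

The gaps between successive printed values shrink as the margin grows, which is the diminishing marginal return described above. The losing team's share is one minus the winner's, because a race to 13 is symmetric: `win_fraction(1 - p)` equals `1 - win_fraction(p)`.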

Using the win fractions, one can estimate a strength parameter for each team, just like in a win/loss model. A point-scoring model works similarly, but each team is credited with multiple “wins” each game, equal to the number of points it scored. The major flaw of a point-scoring model is that the marginal return for larger margins of victory is constant rather than diminishing. The major flaw of a win/loss model is that it doesn’t use all the available information and tends to perform poorly with small numbers of results, like those we deal with in ultimate. In particular, win/loss models vastly overrate undefeated teams that played an obviously weak schedule. The hybrid model alleviates both of these issues.

I also assign a weight to each match based on when it was played relative to the most recent week, and on the winning score. I down-weight matches by 5% each week, and down-weight shortened matches in proportion to the amount of information they contain relative to a match played to 13.[4]
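The weighting scheme can be sketched as a single function. Two details are assumptions, not taken from the article: the 5% weekly down-weighting is treated as compounding (a match played k weeks before the most recent week gets weight 0.95^k), and the information in a shortened match is approximated by its winning score divided by 13. The name `match_weight` is hypothetical.

```python
def match_weight(weeks_ago: int, winning_score: int,
                 weekly_decay: float = 0.05, full_game: int = 13) -> float:
    """Hypothetical weight for one match (a sketch under the assumptions above)."""
    # ASSUMPTION: the 5% weekly down-weighting compounds, i.e. 0.95 ** weeks_ago.
    time_weight = (1.0 - weekly_decay) ** weeks_ago
    # ASSUMPTION: a shortened game carries winning_score / 13 of a full game's
    # information; games that go past 13 (e.g. 15-13) are capped at full weight.
    info_weight = min(winning_score, full_game) / full_game
    return time_weight * info_weight


print(match_weight(0, 13))  # full-length game in the most recent week
print(match_weight(3, 13))  # full-length game three weeks earlier
print(match_weight(0, 10))  # shortened 10-7 game in the most recent week
```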

Rankings

As is typical, I cannot write down the posterior distribution of the team strength parameters (see footnote [4]) in a tidy equation, but I can draw lots of random samples from it and use these samples to learn about the strength parameters. In particular, each sample from the posterior distribution corresponds to a ranking of the teams.
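To make the sampling step concrete, here is a minimal random-walk Metropolis sampler for a hybrid model of this kind. The article does not publish its likelihood, so the following choices are assumptions for illustration only: team i beats team j with probability 1/(1 + exp(-(θ_i - θ_j))) (a Bradley-Terry-style logistic link); each match contributes w·[f·log π + (1 - f)·log(1 - π)] to the log-likelihood, where f is the observed win fraction and w the match weight; and every strength gets an independent Normal(0, 3²) prior. The strengths it produces are therefore not on the same scale as the tables in this article.

```python
import math
import random
from math import comb


def win_fraction(p, target=13):
    """Win fraction from footnote 3: P(reaching `target` first) with point prob. p."""
    q = 1.0 - p
    return sum(comb(target - 1 + k, k) * p**target * q**k for k in range(target))


def log_sigmoid(x):
    """Numerically stable log(1 / (1 + exp(-x)))."""
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def log_posterior(theta, games, prior_sd=3.0):
    """games: list of (i, j, f, w) with f = team i's win fraction, w = match weight."""
    # ASSUMPTION: independent Normal(0, prior_sd^2) prior on each strength.
    lp = -0.5 * sum((t / prior_sd) ** 2 for t in theta)
    for i, j, f, w in games:
        d = theta[i] - theta[j]
        # ASSUMPTION: logistic link; team i earns f of a win, team j earns 1 - f.
        lp += w * (f * log_sigmoid(d) + (1.0 - f) * log_sigmoid(-d))
    return lp


def metropolis(games, n_teams, n_samples=4000, burn_in=1000, step=1.0, seed=1):
    """One-at-a-time random-walk Metropolis; returns a list of strength vectors."""
    rng = random.Random(seed)
    theta = [0.0] * n_teams
    current = log_posterior(theta, games)
    samples = []
    for it in range(burn_in + n_samples):
        for k in range(n_teams):
            proposal = list(theta)
            proposal[k] += rng.gauss(0.0, step)
            candidate = log_posterior(proposal, games)
            # Accept with probability min(1, exp(candidate - current)).
            if rng.random() < math.exp(min(0.0, candidate - current)):
                theta, current = proposal, candidate
        if it >= burn_in:
            samples.append(list(theta))
    return samples


# Toy data: team 0 beat team 1 13-7, team 1 beat team 2 13-9, team 0 beat team 2 13-5.
scores = [(0, 1, 13, 7), (1, 2, 13, 9), (0, 2, 13, 5)]
games = [(i, j, win_fraction(a / (a + b)), 1.0) for i, j, a, b in scores]
draws = metropolis(games, n_teams=3)
means = [sum(d[k] for d in draws) / len(draws) for k in range(3)]
print([round(m, 2) for m in means])
```

Each stored draw is one plausible set of strengths, so sorting a single draw gives one plausible ranking of the teams.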

The actual rankings I report reflect each team’s average rank across all the posterior samples. For example, if Brute Squad is ranked 1st in 40% of the samples and 2nd in 60% of the samples, then their average rank is 1.6 = 1*(0.4) + 2*(0.6). In contrast, if Seattle Riot is ranked 1st in 60% of the samples and 2nd in 40%, then their average rank is 1.4 = 1*(0.6) + 2*(0.4). In this case, Seattle Riot would be ranked ahead of Brute Squad. Bayesian methods, which are usually implemented with Monte Carlo sampling like this, have become quite popular, in part due to Nate Silver over at 538, and to huge advancements in computational power and statistical methodology during the past 25 years or so.
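The average-rank calculation is easy to sketch. Given posterior draws of the strength parameters (here a plain list of dictionaries, a hypothetical input format), rank the teams within each draw and average those ranks. The toy draws below are constructed to match the Brute Squad and Seattle Riot example, with Seattle Riot stronger in 6 of 10 draws.

```python
from collections import defaultdict


def average_ranks(draws):
    """draws: list of {team: strength}; rank 1 goes to the strongest team in a draw."""
    totals = defaultdict(float)
    for strengths in draws:
        ordered = sorted(strengths, key=strengths.get, reverse=True)
        for rank, team in enumerate(ordered, start=1):
            totals[team] += rank
    return {team: total / len(draws) for team, total in totals.items()}


# Toy posterior: Riot ranked 1st in 60% of draws, Brute Squad 1st in the other 40%.
draws = ([{"Seattle Riot": 10.9, "Brute Squad": 10.5}] * 6
         + [{"Seattle Riot": 10.4, "Brute Squad": 10.6}] * 4)
avg = average_ranks(draws)
ranking = sorted(avg, key=avg.get)
print(avg)      # average rank per team
print(ranking)  # teams ordered by average rank
```

This yields an average rank of 1.4 for Seattle Riot and 1.6 for Brute Squad, so Seattle Riot is listed first.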

Probabilistic Bid Allocation

The current rank-order allocation scheme could still be used with these rankings. However, this new method naturally facilitates an alternative, probabilistic bid allocation scheme. To do this, I use the posterior samples to calculate the probability that a particular team is in the top 16 (or top 20), i.e., the fraction of samples in which it is ranked that high.

By summing these probabilities for all the teams in a particular region, I calculate the expected number of top-16 teams in that region, which I call the bid score. To allocate the 8 (or 10) wildcard bids, I sequentially assign a bid to the region with the highest bid score, subtracting 1 from that region’s bid score each time it is awarded a bid. In this way, the bids are allocated to reflect regional strength while accounting for uncertainty in the actual rankings.
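The bid score and the sequential allocation can be sketched in a few lines. The input format (a dictionary of top-16 probabilities per team plus a team-to-region map) and the function names are hypothetical, and the sketch assumes each region already holds one automatic bid, so only the wildcards are allocated greedily. Fed the men’s bid scores from the table in the Results section, it reproduces that table’s probabilistic column.

```python
def bid_scores(p_top, region_of):
    """Sum each region's P(team finishes in the top 16) to get its expected count."""
    scores = {}
    for team, prob in p_top.items():
        region = region_of[team]
        scores[region] = scores.get(region, 0.0) + prob
    return scores


def allocate_wildcards(scores, n_wildcards=8):
    """Give each wildcard to the region with the highest remaining bid score."""
    remaining = dict(scores)
    bids = {region: 1 for region in scores}  # ASSUMPTION: one automatic bid per region
    for _ in range(n_wildcards):
        region = max(remaining, key=remaining.get)  # ties go to the first region listed
        bids[region] += 1
        remaining[region] -= 1.0
    return bids


# Men's division bid scores, taken from the table in this article.
mens = {"GL": 1.55, "MA": 2.00, "NC": 1.92, "NE": 3.10,
        "NW": 1.49, "SC": 2.51, "SE": 1.61, "SW": 1.54}
print(allocate_wildcards(mens))
```

Subtracting one automatic bid from every region before the loop would not change which region has the highest score at each step, so the greedy order, and the result, is the same either way.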

Prediction

Because the proposed method relies on the win fraction, which is derived from a working assumption linking the probability that a team scores on a given point to the probability that it wins the game, I can invert the strength parameters of two teams into the average probability that one team scores on the other in a given point, and then simulate a match between the two teams using the resulting point-scoring probability.

Extending this, I can simulate the entire National Championships in each division. In particular, I can sample one set of strength parameters for the 16 teams competing at Nationals, simulate the tournament given these values, and record each team’s finish. Iterating this process, I can calculate the posterior probability of each team’s pool placement and finishing place, i.e., 1st, 2nd, semis, and quarters.
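The inversion can be sketched with bisection, since the win fraction increases with p. The logistic mapping from a strength difference to a win probability is an assumption made for illustration (the article does not state its link function), and all function names are hypothetical. Each simulated point is treated as independent, matching the working assumption behind the win fraction.

```python
import math
import random
from math import comb


def win_fraction(p, target=13):
    """P(reaching `target` first) when each point is won independently with prob. p."""
    q = 1.0 - p
    return sum(comb(target - 1 + k, k) * p**target * q**k for k in range(target))


def win_probability(theta_i, theta_j):
    """ASSUMPTION: logistic (Bradley-Terry-style) link between strengths and wins."""
    return 1.0 / (1.0 + math.exp(-(theta_i - theta_j)))


def point_probability(win_prob, target=13, tol=1e-10):
    """Invert win_fraction by bisection: find p with win_fraction(p) == win_prob."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if win_fraction(mid, target) < win_prob:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def simulate_match(p, target=13, rng=random):
    """Play points until one side reaches `target`; p = P(team A scores a point)."""
    a = b = 0
    while a < target and b < target:
        if rng.random() < p:
            a += 1
        else:
            b += 1
    return a, b


p = point_probability(win_probability(0.5, 0.0))  # strengths differ by 0.5
print(round(p, 3), simulate_match(p, rng=random.Random(7)))
```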
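A pool-play simulation along these lines can be sketched as follows. It takes a list of point-probability matrices, one per posterior draw (a hypothetical input format; each entry would be the point-scoring probability obtained by inverting the win fraction, as described above), plays a full round robin for each simulated pool, and tallies finishing places. USA Ultimate’s actual pool tiebreakers are not reproduced: as a simplifying assumption, teams are ordered by wins, then point differential, then at random.

```python
import itertools
import random
from collections import Counter


def simulate_match(p, target=13, rng=random):
    """Play points until one side reaches `target`; p = P(first team scores)."""
    a = b = 0
    while a < target and b < target:
        if rng.random() < p:
            a += 1
        else:
            b += 1
    return a, b


def pool_placement_probs(point_prob_draws, n_sims=4000, seed=2016):
    """point_prob_draws: list of matrices P with P[i][j] = P(team i scores on team j).

    Each simulated pool uses one matrix, cycling through the posterior draws.
    Returns probs[team][place - 1].
    """
    rng = random.Random(seed)
    n = len(point_prob_draws[0])
    counts = [Counter() for _ in range(n)]
    for s in range(n_sims):
        P = point_prob_draws[s % len(point_prob_draws)]
        wins, diff = [0] * n, [0] * n
        for i, j in itertools.combinations(range(n), 2):  # full round robin
            a, b = simulate_match(P[i][j], rng=rng)
            wins[i if a > b else j] += 1
            diff[i] += a - b
            diff[j] += b - a
        # ASSUMPTION: simplified tiebreak (wins, point differential, then random).
        order = sorted(range(n), key=lambda k: (-wins[k], -diff[k], rng.random()))
        for place, team in enumerate(order, start=1):
            counts[team][place] += 1
    return [[counts[t][pl] / n_sims for pl in range(1, n + 1)] for t in range(n)]


# Toy pool: team 0 scores 60% of points against everyone; the others are even.
P = [[0.5, 0.6, 0.6, 0.6],
     [0.4, 0.5, 0.5, 0.5],
     [0.4, 0.5, 0.5, 0.5],
     [0.4, 0.5, 0.5, 0.5]]
for team, probs in enumerate(pool_placement_probs([P])):
    print(team, [f"{x:.0%}" for x in probs])
```

Passing one matrix per posterior draw, rather than a single fixed matrix, is what carries the uncertainty in the strength parameters through to the placement probabilities.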

Results

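Below, I report the top 25 teams in each division at the end of the regular season, along with bid allocation under the two schemes. In the rankings tables, 90% CrI denotes a 90% credible interval; in the bid tables, the bid score is a region’s expected number of top-16 teams. I then report the predictive probabilities of each team’s placement in its pool and its finish at this week’s Nationals.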

Men’s: End Of Regular Season Rankings & Bid Allocation

| Team | Rank | Mean Rank (90% CrI) | Mean Strength Parameter (90% CrI) |
|---|---|---|---|
| Ironside | 1 | 3.66 (1,10) | 7.92 (5.71,10.14) |
| Truck Stop | 2 | 4.11 (1,10) | 7.77 (5.63,9.93) |
| Revolver | 3 | 6.66 (1,17) | 7.44 (5.08,9.84) |
| GOAT | 4 | 7.87 (1,19) | 7.23 (4.93,9.58) |
| Johnny Bravo | 5 | 8.09 (2,17) | 7.13 (4.97,9.30) |
| Chicago Machine | 6 | 9.06 (2,18) | 6.99 (4.86,9.13) |
| Madison Club | 7 | 9.83 (2,19) | 6.90 (4.77,9.06) |
| H.I.P | 8 | 10.27 (1,23) | 6.98 (4.54,9.58) |
| PoNY | 9 | 10.35 (3,20) | 6.84 (4.67,9.02) |
| Doublewide | 10 | 11.36 (3,22) | 6.74 (4.50,8.98) |
| Sockeye | 11 | 11.81 (3,23) | 6.70 (4.42,9.00) |
| Florida United | 12 | 13.48 (3,26) | 6.50 (4.20,8.80) |
| Rhino | 13 | 14.39 (6,24) | 6.38 (4.25,8.50) |
| HIGH FIVE | 14 | 15.00 (6,25) | 6.31 (4.18,8.45) |
| Patrol | 15 | 15.98 (7,26) | 6.21 (4.05,8.35) |
| Prairie Fire | 16 | 16.30 (6,27) | 6.18 (3.96,8.39) |
| Sub Zero | 17 | 17.63 (8,27) | 6.01 (3.89,8.13) |
| Ring of Fire | 18 | 18.05 (7,30) | 5.99 (3.73,8.25) |
| Chain Lightning | 19 | 18.08 (5,31) | 5.98 (3.65,8.32) |
| SoCal Condors | 20 | 19.60 (3,35) | 5.80 (3.34,8.45) |
| Guerrilla | 21 | 20.35 (10,30) | 5.69 (3.60,7.79) |
| Dig | 22 | 25.32 (12,39) | 5.13 (2.95,7.34) |
| Furious George | 23 | 27.10 (15,40) | 4.96 (2.80,7.13) |
| Inception | 24 | 27.45 (18,39) | 4.95 (2.86,7.03) |
| Richmond Floodwall | 25 | 27.83 (1,58) | 5.15 (1.80,9.12) |

| Region | Rank-Order | Probabilistic | Bid Score |
|---|---|---|---|
| GL | 2 | 2 | 1.55 |
| MA | 2 | 2 | 2.00 |
| NC | 2 | 2 | 1.92 |
| NE | 3 | 3 | 3.10 |
| NW | 2 | 1 | 1.49 |
| SC | 3 | 2 | 2.51 |
| SE | 1 | 2 | 1.61 |
| SW | 1 | 2 | 1.54 |

Mixed: End Of Regular Season Rankings & Bid Allocation

| Team | Rank | Mean Rank (90% CrI) | Mean Strength Parameter (90% CrI) |
|---|---|---|---|
| AMP | 1 | 2.80 (1,7) | 6.59 (4.45,8.79) |
| Slow White | 2 | 3.50 (1,9) | 6.41 (4.20,8.66) |
| Drag’n Thrust | 3 | 5.91 (1,14) | 5.86 (3.68,8.06) |
| Seattle Mixtape | 4 | 6.37 (1,15) | 5.80 (3.60,8.04) |
| Steamboat | 5 | 8.81 (2,20) | 5.42 (3.24,7.65) |
| The Chad Larson Exp. | 6 | 9.77 (3,19) | 5.24 (3.18,7.31) |
| Metro North | 7 | 11.87 (4,23) | 5.01 (2.94,7.08) |
| Mischief | 8 | 12.37 (2,29) | 5.05 (2.73,7.40) |
| Alloy | 9 | 13.88 (3,30) | 4.86 (2.62,7.12) |
| Love Tractor | 10 | 14.37 (6,26) | 4.74 (2.69,6.80) |
| shame. | 11 | 15.22 (1,46) | 5.24 (2.12,8.88) |
| NOISE | 12 | 15.96 (5,30) | 4.60 (2.52,6.69) |
| Cosa Nostra | 13 | 16.54 (4,36) | 4.64 (2.35,6.96) |
| Polar Bears | 14 | 17.30 (5,36) | 4.55 (2.32,6.78) |
| Bucket | 15 | 18.18 (4,39) | 4.49 (2.21,6.83) |
| Wild Card | 16 | 18.48 (6,37) | 4.43 (2.26,6.59) |
| BFG | 17 | 19.12 (4,41) | 4.41 (2.12,6.76) |
| Bang! | 18 | 19.58 (5,40) | 4.34 (2.13,6.58) |
| UPA | 19 | 20.95 (3,53) | 4.41 (1.79,7.12) |
| Ambiguous Grey | 20 | 22.06 (9,40) | 4.11 (2.05,6.18) |
| Blackbird | 21 | 26.87 (12,48) | 3.80 (1.72,5.86) |
| Birdfruit | 22 | 30.13 (12,57) | 3.63 (1.46,5.79) |
| 7 Figures | 23 | 30.64 (11,60) | 3.61 (1.37,5.85) |
| Charlotte Storm | 24 | 30.84 (10,59) | 3.58 (1.37,5.83) |
| Dorado | 25 | 31.73 (10,64) | 3.57 (1.26,5.90) |

| Region | Rank-Order | Probabilistic | Bid Score |
|---|---|---|---|
| GL | 1 | 1 | 0.69 |
| MA | 2 | 2 | 1.54 |
| NC | 1 | 1 | 0.71 |
| NE | 2 | 3 | 3.33 |
| NW | 3 | 3 | 3.10 |
| SC | 2 | 2 | 1.77 |
| SE | 2 | 1 | 1.39 |
| SW | 3 | 3 | 2.95 |

Women’s: End Of Regular Season Rankings & Bid Allocation

| Team | Rank | Mean Rank (90% CrI) | Mean Strength Parameter (90% CrI) |
|---|---|---|---|
| Seattle Riot | 1 | 1.95 (1,4) | 10.68 (7.52,13.90) |
| Brute Squad | 2 | 2.56 (1,5) | 10.38 (7.23,13.59) |
| Molly Brown | 3 | 3.90 (1,7) | 9.81 (6.69,12.98) |
| Fury | 4 | 4.01 (1,7) | 9.77 (6.64,12.93) |
| Scandal | 5 | 4.86 (2,8) | 9.46 (6.34,12.62) |
| Traffic | 6 | 6.34 (3,10) | 8.93 (5.80,12.10) |
| 6ixers | 7 | 8.31 (1,18) | 8.49 (4.88,12.42) |
| Nightlock | 8 | 9.61 (6,15) | 7.91 (4.85,11.02) |
| Phoenix | 9 | 10.34 (7,16) | 7.71 (4.69,10.77) |
| Wildfire | 10 | 10.83 (5,19) | 7.69 (4.42,11.01) |
| Heist | 11 | 12.70 (8,19) | 7.21 (4.23,10.23) |
| Showdown | 12 | 13.09 (7,21) | 7.16 (4.01,10.35) |
| Underground | 13 | 13.36 (8,20) | 7.10 (4.05,10.19) |
| Ozone | 14 | 13.87 (8,20) | 6.97 (3.98,9.98) |
| Green Means Go | 15 | 15.82 (10,21) | 6.61 (3.63,9.60) |
| Rival | 16 | 16.65 (9,23) | 6.43 (3.35,9.56) |
| BENT | 17 | 16.95 (10,23) | 6.39 (3.35,9.47) |
| Siege | 18 | 17.06 (10,23) | 6.35 (3.28,9.46) |
| Iris | 19 | 17.51 (10,24) | 6.27 (3.15,9.41) |
| Schwa | 20 | 17.68 (12,23) | 6.27 (3.28,9.29) |
| Nemesis | 21 | 18.42 (12,24) | 6.11 (3.08,9.16) |
| Stella | 22 | 24.83 (19,31) | 4.57 (1.44,7.70) |
| Hot Metal | 23 | 25.28 (21,31) | 4.45 (1.44,7.49) |
| Colorado Small Batch | 24 | 25.44 (20,32) | 4.39 (1.29,7.50) |
| Pop | 25 | 25.96 (21,32) | 4.24 (1.23,7.26) |

| Region | Rank-Order | Probabilistic | Bid Score |
|---|---|---|---|
| GL | 1 | 1 | 0.73 |
| MA | 2 | 1 | 1.56 |
| NC | 1 | 1 | 0.87 |
| NE | 2 | 3 | 3.15 |
| NW | 3 | 3 | 3.14 |
| SC | 2 | 2 | 1.77 |
| SE | 2 | 2 | 1.72 |
| SW | 3 | 3 | 2.95 |

Men’s Probabilities

Pool A

| Place | Ironside | PoNY | Prairie Fire | Ring of Fire |
|---|---|---|---|---|
| 1 | 57% | 18% | 13% | 11% |
| 2 | 24% | 29% | 24% | 23% |
| 3 | 12% | 28% | 30% | 30% |
| 4 | 6% | 25% | 34% | 30% |

Pool B

| Place | Revolver | Sockeye | Patrol | Doublewide |
|---|---|---|---|---|
| 1 | 42% | 28% | 12% | 18% |
| 2 | 28% | 28% | 19% | 25% |
| 3 | 18% | 24% | 29% | 29% |
| 4 | 12% | 20% | 40% | 28% |

Pool C

| Place | Truck Stop | Madison Club | HIGH FIVE | Dig |
|---|---|---|---|---|
| 1 | 43% | 30% | 17% | 9% |
| 2 | 30% | 29% | 24% | 16% |
| 3 | 17% | 24% | 30% | 28% |
| 4 | 9% | 16% | 29% | 46% |

Pool D

| Place | Johnny Bravo | Chicago Machine | H.I.P | Furious George |
|---|---|---|---|---|
| 1 | 34% | 29% | 28% | 8% |
| 2 | 30% | 29% | 26% | 15% |
| 3 | 22% | 25% | 25% | 28% |
| 4 | 13% | 17% | 21% | 48% |

Championship Bracket

| Team | 1st | 2nd | Semis | Quarters |
|---|---|---|---|---|
| Ironside | 26% | 14% | 17% | 26% |
| Truck Stop | 16% | 12% | 18% | 28% |
| Revolver | 13% | 9% | 21% | 28% |
| Johnny Bravo | 9% | 9% | 21% | 29% |
| Madison Club | 7% | 8% | 14% | 31% |
| Chicago Machine | 6% | 8% | 16% | 30% |
| Sockeye | 6% | 7% | 15% | 28% |
| H.I.P | 5% | 6% | 16% | 28% |
| PoNY | 4% | 6% | 11% | 26% |
| HIGH FIVE | 2% | 4% | 10% | 26% |
| Doublewide | 2% | 4% | 9% | 26% |
| Prairie Fire | 2% | 4% | 8% | 22% |
| Ring of Fire | 1% | 3% | 7% | 20% |
| Patrol | 1% | 3% | 6% | 20% |
| Dig | 1% | 2% | 5% | 18% |
| Furious George | 0% | 2% | 5% | 17% |

Mixed Probabilities

Pool A

| Place | AMP | Metro North | Ambiguous Grey | Blackbird |
|---|---|---|---|---|
| 1 | 60% | 22% | 10% | 9% |
| 2 | 25% | 33% | 21% | 21% |
| 3 | 10% | 26% | 31% | 33% |
| 4 | 5% | 20% | 38% | 37% |

Pool B

| Place | Slow White | Alloy | NOISE | Public Enemy |
|---|---|---|---|---|
| 1 | 53% | 23% | 17% | 7% |
| 2 | 27% | 30% | 28% | 15% |
| 3 | 14% | 28% | 31% | 28% |
| 4 | 6% | 19% | 24% | 51% |

Pool C

| Place | Drag’n Thrust | Steamboat | Love Tractor | No Touching! |
|---|---|---|---|---|
| 1 | 42% | 35% | 20% | 3% |
| 2 | 32% | 31% | 29% | 9% |
| 3 | 19% | 24% | 33% | 23% |
| 4 | 7% | 10% | 18% | 65% |

Pool D

| Place | Seattle Mixtape | Mischief | shame. | G-Unit |
|---|---|---|---|---|
| 1 | 26% | 24% | 48% | 2% |
| 2 | 35% | 33% | 25% | 7% |
| 3 | 31% | 32% | 19% | 19% |
| 4 | 9% | 11% | 8% | 72% |

Championship Bracket

| Team | 1st | 2nd | Semis | Quarters |
|---|---|---|---|---|
| AMP | 22% | 16% | 17% | 27% |
| Drag’n Thrust | 14% | 12% | 16% | 35% |
| Slow White | 14% | 10% | 26% | 32% |
| shame. | 14% | 9% | 26% | 27% |
| Seattle Mixtape | 9% | 10% | 20% | 31% |
| Steamboat | 9% | 9% | 14% | 34% |
| Mischief | 7% | 8% | 18% | 30% |
| Metro North | 3% | 7% | 11% | 28% |
| Alloy | 3% | 6% | 13% | 30% |
| Love Tractor | 2% | 5% | 11% | 32% |
| NOISE | 2% | 4% | 10% | 29% |
| Ambiguous Grey | 1% | 2% | 6% | 19% |
| Blackbird | 0% | 1% | 5% | 18% |
| Public Enemy | 0% | 1% | 3% | 14% |
| No Touching! | 0% | 0% | 1% | 10% |
| G-Unit | 0% | 0% | 1% | 7% |

Women’s Probabilities

Pool A

| Place | Seattle Riot | Nightlock | Iris | Heist |
|---|---|---|---|---|
| 1 | 86% | 7% | 3% | 3% |
| 2 | 12% | 47% | 18% | 24% |
| 3 | 1% | 25% | 28% | 46% |
| 4 | 1% | 21% | 51% | 26% |

Pool B

| Place | Brute Squad | Wildfire | Showdown | Rival |
|---|---|---|---|---|
| 1 | 74% | 15% | 7% | 5% |
| 2 | 19% | 35% | 26% | 20% |
| 3 | 6% | 29% | 33% | 32% |
| 4 | 2% | 22% | 35% | 42% |

Pool C

| Place | Molly Brown | Traffic | Phoenix | Green Means Go |
|---|---|---|---|---|
| 1 | 53% | 34% | 10% | 4% |
| 2 | 31% | 38% | 21% | 11% |
| 3 | 12% | 20% | 37% | 31% |
| 4 | 4% | 9% | 32% | 55% |

Pool D

| Place | Fury | Scandal | Ozone | Schwa |
|---|---|---|---|---|
| 1 | 47% | 44% | 7% | 2% |
| 2 | 39% | 38% | 16% | 7% |
| 3 | 11% | 14% | 45% | 30% |
| 4 | 3% | 4% | 32% | 61% |

Championship Bracket

| Team | 1st | 2nd | Semis | Quarters |
|---|---|---|---|---|
| Seattle Riot | 38% | 21% | 17% | 21% |
| Molly Brown | 17% | 17% | 18% | 36% |
| Brute Squad | 13% | 9% | 45% | 26% |
| Fury | 12% | 13% | 29% | 33% |
| Scandal | 8% | 12% | 27% | 38% |
| Traffic | 7% | 12% | 15% | 40% |
| Wildfire | 1% | 4% | 11% | 29% |
| Nightlock | 1% | 3% | 8% | 28% |
| Phoenix | 1% | 2% | 6% | 29% |
| Ozone | 0% | 2% | 7% | 28% |
| Showdown | 0% | 1% | 5% | 21% |
| Heist | 0% | 1% | 4% | 18% |
| Rival | 0% | 1% | 3% | 15% |
| Iris | 0% | 1% | 3% | 13% |
| Green Means Go | 0% | 1% | 2% | 16% |
| Schwa | 0% | 0% | 1% | 11% |

[1] If you contact me at 8tmurray at gmail dot com, I would be happy to send you a PDF with the bare-bones technical details. I will soon be submitting a manuscript to a statistical journal with these details and an objective evaluation of various methods for ranking ultimate teams.

[2] The first hybrid model was proposed by Annis and Craig (2005) in the paper “Hybrid Paired Comparison Analysis, with Applications to the Ranking of College Football Teams,” and later simplified by Annis (2007) in the paper “Dimension Reduction for Hybrid Paired Comparison Models.” These papers discuss the flaws engendered by win/loss and point-scoring models, and I point out some of these flaws in the main text.

[3] I derived the win fraction through a working assumption about the point-scoring process. Namely, the win fraction is the percentage of games that a team would win against its opponent if its probability of scoring on each point were equal to p and the game were played hard to 13. The observed win fraction in a particular game is calculated by plugging in for p the observed proportion of points that the team scored. This definition of the win fraction is a subjective choice, but I believe it is reasonable, and it results in a parsimonious and useful method.

[4] The above weights and win fractions for each match lead to an objective function called the likelihood. I take a Bayesian approach, so I also specify the same weakly informative prior distribution for each team’s strength parameter. Doing so ensures the rankings are fair and dominated by the results from the season. Together, the likelihood and prior result in a posterior distribution that tells me the likely values of the team strength parameters, and thus the likely rankings.